Wondering how to sort a list in python? Want to learn different algorithms to sort a list in different time complexities?

Great! You are at the right place. Here in this article, I will explain to you different Sorting algorithms implemented in Python language along with their codes. This article will be a one-stop solution for you to know everything about sorting algorithms in Python.

Sorting refers to the arranging or ordering of data elements in a particular order. It can be done on Numbers, Alphabets and even on Records. Sorting a list helps to lessen our work by ordering data in an increasing or decreasing fashion according to some linear relationship among the data items. Sorting reduces the time to look up or search for some data in a list. For example, think of a dictionary or a phone directory. The words in a dictionary are organized in alphabetical order. This order helps you to easily find the word you are looking for among thousands of different words.

So, here in this article, I am going to explain to you various sorting algorithms along with their Codes in python. These algorithms will be of different time complexities. Also, you will see a Pre-defined Sorting library in Python. Along with every algorithm, you will see a detailed explanation of the working of that algorithms and also the Asymptotic time it takes to run successfully on a list of N elements. So, let’s start with our article and learn “How to sort a list in Python.

Types of Sorting Algorithms in Python

There are many different types of Sorting algorithms available in Python that you can implement in your Code. Algorithms are classified into different types using their time complexities. Time complexities define the time of an algorithm it takes to successfully sort a list of N elements. Various sorting algorithms have different time complexities to successfully sort a list. The algorithms in this article are mainly classified into two types: The N² Sorting Algorithms and The NLOGN Sorting Algorithms.

The sorting algorithms that we are going to discuss in this article will belong to any one of the mentioned Time Complexities. Below given is the list of Sorting Algorithms that I am going to discuss in this article.

- Selection Sort

- Bubble Sort

- Insertion Sort

- Merge Sort

- Quick Sort

- Heap Sort

- sort() or sorted() Python Functions

Along with these algorithms, you will also learn how do they work and what is the time complexity of these algorithms. So without wasting any further time, let’s jump straight to our different Sorting algorithms and their implementations in Python.

Selection Sort in Python

Selection Sorting algorithms is one of the simplest algorithms to sort a list in Python. In this sorting technique, first, we make a list of unsorted or scrambled elements. You can sort this list by finding the smallest element of the list and append it at the beginning of the list. You can even take two lists, the other one being empty. Find the smallest element in the first one and insert it into the second list. So, let’s see the sort list algorithm in Python. Following is the Code of Selection Sort implemented in Python.

# Python program for implementation of Selection

# Sort

A = [64, 25, 12, 22, 11]

# Traverse through all array elements

for i in range(len(A)):

# Find the minimum element in remaining

# unsorted array

index = i

for j in range(i+1, len(A)):

if A[index] > A[j]:

index = j

# Swap the found minimum element with

# the first element

A[i], A[index] = A[index], A[i]

# Driver code to test above

print ("Sorted array")

for i in range(len(A)):

print(A[i]),

Code Explanation for Selection Sort

In this section, I am going to explain to you the working of the above Python code. Python Codes are generally very simple and easy to understand. The comments between the code lines are useful to understand what the particular section of the code does. So, let’s understand what is the code trying to do here.

- Firstly, I have pre-defined a list with 5 elements.

- The next step is to find the minimum of the list i.e the smallest element of the list.

- With the nested for loop, we compare each element of the list with one another.

- The variable index is assigned the smallest element after each comparison.

- After the loop ends, the index will be the smallest element of the list.

- The third and the last step swap the index element with the first element of our list.

- Thus arranging them in ascending order.

- This is repeated until the first for loop reaches the last element. Thus comparing the last element with every other element in the list.

- Lastly, the sorted array is printed for you.

You can reverse the order of this sorting algorithms i.e you can sort in descending order simply by changing the relational operator. Change the operator from smaller than to greater than in the 12th line of the Python code.

Time Complexity for Selection Sort

The time complexity of the Selection sort algorithm can easily be found by examining the for loops in the code. To sort the elements of a list, the code uses one for loop to iterate through each element of the list and the other to compare one element to every other element of the list. For a list with N elements, the outer loop iterates n times. The inner loop iterate N-1 when i is equal to 1, and then N-2 as i is equal to 2 and so forth.

The number of comparisons are “(N – 1) + (N – 2) + . . . . + 1” which gives the Selection Sort a time complexity of O(N²).

Bubble Sort in Python

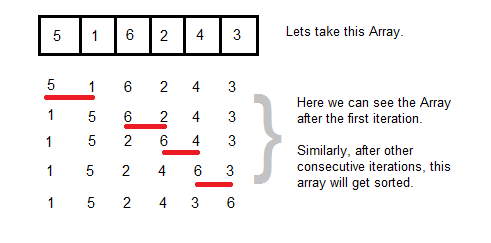

Bubble Sorting algorithms is also an algorithm to sort a list in Python. In this Sorting technique, you select consecutive pairs of two elements in a list and compare them to arrange in a particular order. In other words, in this technique adjacent elements are repeatedly swapped if they are in the wrong order. N passes are done over a list to get the list perfectly sorted. The code implementation of Bubble sort in python is very simple. So, let’s see the sort list algorithm in Python. The Python code for Bubble Sort is given below.

# Python program for implementation of Bubble Sort

def bubbleSort(arr):

n = len(arr)

# Traverse through all array elements

for i in range(n):

# Last i elements are already in place

for j in range(0, n-i-1):

# traverse the array from 0 to n-i-1

# Swap if the element found is greater than the next element

if arr[j] > arr[j+1] :

arr[j], arr[j+1] = arr[j+1], arr[j]

# Driver code to test above

arr = [64, 34, 25, 12, 22, 11, 90]

bubbleSort(arr)

print ("Sorted array is:")

for i in range(len(arr)):

print ("%d" %arr[i]),

Code Explanation for Bubble Sort

In this section, I am going to explain to you the working of the above Python code. Python Codes are generally very simple and easy to understand. The comments between the code lines are useful to understand what the particular section of the code does. So, let’s understand what is the code trying to do here.

- At the start of Program, the code selects the first two elements and compares them with one another.

- If the elements are in the wrong order, the program swaps their position.

- They are left unaltered if they are in the correct order.

- The same procedure is followed for all the alternate pairs of the list by the second FOR loop of the code.

- For example, 1-2, 2-3, 3-4, 4-5 ……. (N-1)-N.

- The first FOR loop helps to repeat the whole above steps N times on the list.

- At the last of this nested FOR loops, you will have your Sorted list.

You can reverse the order of this sorting algorithms i.e you can sort in descending order simply by changing the relational operator. Change the operator from smaller than to greater than in the 14th line of the Python code.

Time Complexity for Bubble Sort

The time complexity of the Bubble sort algorithm can easily be found by examining the for loops in the code. As you have seen in the Code explanation section above that the code used nested FOR loops. The first one to repeat procedure N times on the list and the second one to repeat the comparing and swapping for N-I-1 adjacent pairs. In the worst-case scenario (when the list is in reverse order), this algorithm would have to swap every single item of the array. Therefore, if we have n elements in our list, we would have n iterations per item – thus Bubble Sort’s time complexity is O(n^2).

As you can see that this code will run until the very end even if we have the sorted list at the half-way of the procedure. This will be time-consuming for the best-case scenario of our program where the list is already sorted. To fix it, you can introduce a Boolean flag inside the FOR loops. Our flag would be set to True on every iteration. And if anytime our list gets sorted midway, it will change to False and will break the loops.

Insertion Sort in Python

Insertion Sort is another sorting algorithms in Python of the same category as of Selection and Bubble Sort. To understand Insertion sort in a better way, let’s take the example of a deck of cards. When you have a randomly arranged deck of cards and want to sort it, what procedure do you follow? To sort a deck of cards, you start from the second card, remove it and insert it in its appropriate place. The same procedure is followed by this sorting technique to sort a list in a particular order. So, let’s see the sort list algorithm in Python. The code implementation of Insertion Sort in Python is given below.

# Python program for implementation of Insertion Sort

# Function to do insertion sort

def insertionSort(arr):

# Traverse through 1 to len(arr)

for i in range(1, len(arr)):

key = arr[i]

# Move elements of arr[0..i-1], that are

# greater than key, to one position ahead of their current position

j = i-1

while j >= 0 and key < arr[j] :

arr[j + 1] = arr[j]

j -= 1

arr[j + 1] = key

# Driver code to test above

arr = [12, 11, 13, 5, 6]

insertionSort(arr)

for i in range(len(arr)):

print ("% d" % arr[i])

Code Explanation for Insertion Sort

In this section, I am going to explain to you the working of the above Python code for the Insertion Sort algorithm for lists in Python. Python Codes are generally very simple and easy to understand. The comments between the code lines are useful to understand what the particular section of the code does. So, let’s understand what is the code trying to do here.

- The code starts with taking the second element of the list as Key.

- The code then compares the key with every element before the Key.

- For example, if your key is the element at index 4, then the code will compare it with the indexes 0, 1, 2, 3.

- With every comparison, the code swaps the key if it is smaller than the other element.

- The code repeats the same procedure to every element from index 2 to the last index of the list.

- The first FOR loop helps to repeat the procedure for all the elements of the list.

- The second FOR loop helps to compare and swap the elements in the list that are before our Key.

You can reverse the order of this sorting algorithms i.e you can sort in descending order simply by changing the relational operator. Change the operator from smaller than to greater than in the 14th line of the Python code.

Time Complexity for Insertion Sort

The time complexity of the Insertion sort algorithm can also be found by examining the for loops in the code. As you have seen in the Code explanation section above that the code used nested FOR loops. The first one to repeat procedure N-1 times on the list and the second one to repeat the comparing and swapping for all the elements before the Key in the list. Insertion sort takes maximum time to sort if elements are sorted in reverse order. And it takes minimum time (Order of n) when elements are already sorted. It uses an incremental approach to the problem.

In the worst-case scenario, an array would be sorted in reverse order. The outer FOR loop in the Insertion Sort function always iterates n-1 times. In the worst-case scenario, the inner for loop would swap once, then swap two and so forth. The number of swaps would then be 1 + 2 + 3 +. . . . . . + (N – 3) + ( N – 2) + (N – 1) which gives the Insertion Sort a time complexity of O(N^2).

MergeSort in Python

Merge Sort is a different sorting technique then all the techniques above. It is an external sorting, in which outside storage is required during the Sorting process to sort the elements of a given list. But this algorithm is very less time consuming than Selection, Bubble or Insertion sort. In this sorting algorithm, the list is first split into two halves. The code keeps on splitting the halves into other halves until you get multiple lists of single elements. After the dividing process, the code joins the single element lists by pairing them and sorting them during this join process.

So, let’s see the sort list algorithm in Python. The Python code for Merge Sort is given below.

# Python program for implementation of MergeSort

def mergeSort(arr):

if len(arr) >1:

mid = len(arr)//2 #Finding the mid of the array

L = arr[:mid] # Dividing the array elements

R = arr[mid:] # into 2 halves

mergeSort(L) # Sorting the first half

mergeSort(R) # Sorting the second half

i = j = k = 0

# Copy data to temp arrays L[] and R[]

while i < len(L) and j < len(R):

if L[i] < R[j]:

arr[k] = L[i]

i+=1

else:

arr[k] = R[j]

j+=1

k+=1

# Checking if any element was left

while i < len(L):

arr[k] = L[i]

i+=1

k+=1

while j < len(R):

arr[k] = R[j]

j+=1

k+=1

# Code to print the list

def printList(arr):

for i in range(len(arr)):

print(arr[i],end=" ")

print()

# driver code to test the above code

if __name__ == '__main__':

arr = [12, 11, 13, 5, 6, 7]

print ("Given array is", end="\n")

printList(arr)

mergeSort(arr)

print("Sorted array is: ", end="\n")

printList(arr)Code Explanation for Merge Sort

In this section, I am going to explain to you the working of the above Python code for the Merge Sort algorithm for lists in Python. Python Codes are generally very simple and easy to understand. The comments between the code lines are useful to understand what the particular section of the code does. So, let’s understand what is the code trying to do here.

- The list length is first checked to be greater than one.

- The code assigns the middle element of the list to the variable mid. Using this mid variable the code divides the list into two halves.

- Recursion repeats the same dividing function to the halves again and again.

- Recursion in the code repeats itself until the length of the lists is equal to one.

- The program then merges list pairs one by one.

- The code arranges the elements of the list pairs in a particular order during this merging process.

- For all the pairs the same procedure repeats until you get a single list with N elements.

- This sorted list then prints on the screen.

This algorithm works on the Divide and Conquer strategy. The time is greatly reduced by dividing the list into small lists and then sorting them. You can reverse the order of this sorting algorithms i.e you can sort in descending order simply by changing the relational operator. Change the operator from smaller than to greater than in the 15th line of the Python code.

Time Complexity for Merge Sort

As I told before, the time taken by the merge sort to sort a list in python is very less than the time taken by the above three Algorithms. Merge Sort is a recursive algorithm and time complexity can be expressed as following recurrence relation T(n) = 2T(n/2) + Θ(n). In the above code, instead of FOR loops WHILE loops are taken. There are 3 WHILE loops in the above Python code. The first one is to loop through every element present in the list pairs. The code then copies these elements to temporary lists. The other two WHILE loops check whether there are any elements left in the lists.

Due to the splitting of lists into two halves and merging pairs, the input being recursed is half of what was given. Like binary trees, this makes the time it takes to process grow logarithmically to n. Therefore the overall time complexity of the Merge Sort algorithm is O(Nlog(N)).

QuickSort in Python

Just like Merge Sort, Quicksort also follows the Divide and Conquer strategy. The difference between both the techniques is that Quicksort doesn’t need any external space to successfully sort the list. It is a type of internal Sorting. The Time Complexity of Quicksort is the same as that of Mergesort. In this Sorting technique, the code first assigns an element to the variable Pivot. Our first task is to take this pivot to its correct place in the list. The code does so by comparing and swapping all the smaller elements to the left of the Pivot and all the greater elements to the right.

This way the code also divides the list into two parts. The same procedure of assigning a pivot and then moving it to the correct place is repeated on both the halves recursively. This is repeated until the code fully sorts the list. So, let’s see the sort list algorithm in Python. The code implementation of Quick Sort in Python is given below.

# Python program for implementation of Quicksort Sort

def partition(arr,low,high):

i = ( low-1 ) # index of smaller element

pivot = arr[high] # pivot

for j in range(low , high):

# If current element is smaller than the pivot

if arr[j] < pivot:

# increment index of smaller element

i = i+1

arr[i],arr[j] = arr[j],arr[i]

arr[i+1],arr[high] = arr[high],arr[i+1]

return ( i+1 )

# The main function that implements QuickSort arr[] --> Array to be sorted, low --> Starting index, high --> Ending index

# Function to do Quick sort

def quickSort(arr,low,high):

if low < high:

# pi is partitioning index

pi = partition(arr,low,high)

# Separately sort elements before

# partition and after partition

quickSort(arr, low, pi-1)

quickSort(arr, pi+1, high)

# Driver code to test above

arr = [10, 7, 8, 9, 1, 5]

n = len(arr)

quickSort(arr,0,n-1)

print ("Sorted array is:")

for i in range(n):

print ("%d" %arr[i]),Code Explanation for Quick Sort

In this section, I am going to explain to you the working of the above Python code for the QuickSort algorithm for lists in Python. Python Codes are generally very simple and easy to understand. The comments between the code lines are useful to understand what the particular section of the code does. So, let’s understand what is the code trying to do here.

- Firstly, the code assigns the pivot variable to the largest element of the list.

- Index of the smallest element is also assigned in a variable i.

- Next using a for loop which is lopping through all the elements between low and high.

- The code arranges the list in such a manner that all the elements shorter than the pivot are at the left side of the pivot.

- And all the elements higher than the pivot will be at the right side of the pivot.

- This will set the pivot in its appropriate position.

- Pivot also divides the list into two parts.

- Next, the code repeats the same step on both sides of the list.

- The code repeats this step until all the elements are in their appropriate place.

You may feel that both the MergeSort and QuickSort works in the same manner by dividing the lists until there is only a single element left in each list. Yes, you are right. But, MergeSort requires external storage to completely sort the list. Thatswhy they are external sorting algorithms. Whereas, QuickSort does the same on the list itself. That is without the use of any external storage. QuickSort is also known as an internal sorting algorithm.

Time Complexity for Quick Sort

The time taken by the merge sort to sort a list in python is very less than the time taken by the above three Algorithms. Merge Sort is a recursive algorithm and time complexity can be expressed as following recurrence relation T(n) = 2T(n/2) + Θ(n). In the code, only one FOR loop swaps the element by comparing it with the pivot variable. With a good pivot, the QuickSort function would partition the array into halves which grows logarithmically with n. Therefore the average time complexity of the QuickSort algorithm is O(Nlog(N)).

The worst-case scenario is when the code always assigns the smallest or the largest element as the pivot. This would create partitions of size n-1, causing recursive calls n-1 times. This leads us to the worst-case time complexity of O(N^2). While this is a terrible worst case, Quick Sort is heavily popular because the average time complexity is much quicker. While the function utilizes FOR loops, it does comparisons on all elements of the array to make its swaps. As such, it has a time complexity of O(N).

HeapSort in Python

Heap Sort is one unique sorting technique available in Python. In this sorting technique, you use a tree-like data structure known as Binary Heap. A complete binary tree is a binary tree in which every level, except possibly the last, fills, and all nodes are as far left as possible. A Binary Heap is a Complete Binary Tree where items are stored in a special order such that value in a parent node is greater(or smaller) than the values in its two children nodes. The former is called max heap and the latter is called min-heap. The heap can be represented by a binary tree or array.

So, let’s now see how the HeapSort code looks like.

# To heapify subtree rooted at index i.

# n is size of heap

def heapify(arr, n, i):

largest = i # Initialize largest as root

l = 2 * i + 1 # left = 2*i + 1

r = 2 * i + 2 # right = 2*i + 2

# See if left child of root exists and is

# greater than root

if l < n and arr[i] < arr[l]:

largest = l

# See if right child of root exists and is

# greater than root

if r < n and arr[largest] < arr[r]:

largest = r

# Change root, if needed

if largest != i:

arr[i],arr[largest] = arr[largest],arr[i] # swap

# Heapify the root.

heapify(arr, n, largest)

# The main function to sort an array of given size

def heapSort(arr):

n = len(arr)

# Build a maxheap.

for i in range(n, -1, -1):

heapify(arr, n, i)

# One by one extract elements

for i in range(n-1, 0, -1):

arr[i], arr[0] = arr[0], arr[i] # swap

heapify(arr, i, 0)

# Driver code to test above

arr = [ 12, 11, 13, 5, 6, 7]

heapSort(arr)

n = len(arr)

print ("Sorted array is")

for i in range(n):

print ("%d" %arr[i]),

# This code is contributed by Mohit KumraCode Explanation for Heap Sort

In this section, I’m going to explain to you how the above code works. If you have read the code, you must have noticed that there are comments present above every 2-3 lines of codes. These comments will help you know what the lines of codes below it does. But still, for better and clear understanding, let’s see how the code is working. The first part of the code creates a function known as Heapify. This function will arrange our list of elements in a heap like structure. In this function, the code compares and swaps the elements in a particular order. This will bring the largest element at the root of the Heap. Next, the code swaps the root element with the last element in the array. The code reduces the size of the array by 1.

Next, the code implements the main function i.e the HeapSort function. in our main function, elements are extracted one by one from the top. Thus getting the smallest element at the beginning of our array. After doing this, the code calls the Heapify function again to arrange the elements into a max heap. This code continues until you get a fully sorted array in increasing order.

What this algorithm does is that it first arranges the items given in the array in the required heap as per the order of arrangement of items. The root node in this binary heap contains the largest element. Now, the code replaces this root element with the last element of the heap and then reduces the size by 1. After the previous step, the heap is Heapify again, which is arranging the items in a heap manner. The code repeats the last 2 steps as long as there are elements inside the list.

Time Complexity for Heap Sort

To calculate the time complexity of the HeapSort algorithm, you need to calculate the separate complexities of both the function it contains. The Heapify function and the HeapSort function. Let’s look into the time taken by both the functions one by one.

The Heapify function arranges the elements into a max heap and then swaps the root element with the largest element. In the worst case, the largest element is never the root element, this causes a recursive call to Heapify. But it may look like an expensive time complexity to you, but it is not. I have already told you that the Heap is a complete binary tree type data structure. That means for a 3 element list, the height of the tree will be 2 and for 7 elements, the height will be 3. This means the logarithmic value. So, the Time complexity of Heapify function will be O(logN).

All the important work in this algorithm is through the Heapify function. The HeapSort function’s work is only to iterate the whole code on each element of the list. So, if there are N elements present inside the list, HeapSort will iterate the Heapify function N times. This means that the Time complexity of the HeapSort function is O(N). So the combined complexity of our HeapSort algorithm will be O(NlogN).

Sort() and Sorted() – Python in-built Functions

When you are coding an algorithm or trying to solve a problem using programming, many times you may need Sorting algorithms to ease your work. But it may be very exhausting as well as time taking for you to write such big algorithms to sort a list. This will increase your time complexity as well as space complexity for your code. To save the programmers from this problem, every programming language has some pre-defined libraries or functions, which you can use to easily sort a list. These functions may be of one word or two.

So to sort a list in Python, you have two different functions pre-defined in your programming language. The first one is the Sort() function and the other one is Sorted() function. Both functions do the same thing. Both of them use a special Sorting algorithm known as Tim Sort. Tim Sort is generated by the combination of MergeSort and InsertionSort. To know more about the implementation of both of these functions in detail, navigate to the Real Python’s website. Let’s see how to implement both the functions in Python.

Sort() Function:-

>>>a = [1, 5, 2, 9, 1, 4, 3]

>>>b = [1, 5, 2, 9, 1, 4, 3]

>>>a.sort() //Increasing order

>>>b.sort(reverse = True)

>>>print(a)

>>>print(b)

Output:-

a = [1, 1, 2, 3, 4, 5, 9]

b = [9, 5, 4, 3, 2, 1, 1]

Sorted() Function:-

>>>a = [1, 5, 2, 9, 1, 4, 3]

>>>b = sorted(a)

>>>c = sorted(a, reverse=True)

>>>print(b)

>>>print(c)

Output:-

b = [1, 1, 2, 3, 4, 5, 9]

c = [9, 5, 4, 3, 2, 1, 1]

Time Comparison of different Algorithms

In this section, I am going to show you a brief comparison of various sorting algorithms of this article. I have already shown you the time complexities of all these algorithms separately. All the sort list algorithms in this article are a part of comparison algorithms. In comparison sorting, the code compares the elements of an array with each other to sort the array. Let’s see the time cases comparison of each algorithm of this article below:-

- Selection Sort:- Best, worst and average case complexities all are O(N²). N² which is independent of the distribution of data.

- Bubble Sort:- The average-case complexity is O(N²). Best-case complexity is when the list is already in the correct order. That will take O(N) time complexity Worst case is when the list is in reverse order. Time complexity, in that case, will be the same as Average time complexity.

- Insertion Sort:- Time complexities for Insertion sort is the same as Bubble Sort. The average-case complexity is O(N²). Best-case complexity is when the list is already in the correct order. That will take O(N) time complexity Worst case is when the list is in reverse order. Time complexity, in that case, will be the same as Average time complexity.

- Merge Sort:- Time complexity for Merge Sort is same for all the cases i.e Best, Average and Worst. Best, average and worst-case time complexity is O(NlogN) which is independent of the distribution of data.

- Quick Sort:- Worst case is when the array is in reverse order. The partition algorithm divides the array into two subarrays with 0 and n-1 elements. Therefore, the complexity is O(N²). Best and Average case complexities are the same i.e O(NlogN).

- Heap Sort:- Best, average and worst-case time complexity is the same O(NlogN) which is independent of the distribution of data.

Which Sort List Python Code is best for you?

So among all these algorithms present in front of you, which algorithm should you choose during programming? The algorithms with time complexities O(NlogN) are the fastest for you to use. But you don’t have to write all these algorithms in your code to use that. As I have already told you about the in-built Python Sorting function. They are the fastest and the easiest to use to sort a list in Python. So, all you have to do is use the single line code to sort any type of list while programming in Python.

So, that’s it for this article. I hope you have got what you want to find in this article. If you are having any difficulty or queries or doubts regarding any of the algorithms mentioned above. Feel free to mention them in the Comments Section below. Also if you want any other algorithm or any change in algorithms in this article, you can comment that below in the comments section. I’ll be more than happy to help you with it. I hope now you don’t have to search anymore ‘How to Sort a list in Python’ on the Internet.